Proof of Humanity

Goodhart's law for authenticity.

At some point recently, being human became something you have to prove. A style of sentence you’ve written for years, one you picked up from reading too much Didion or Tim Urban, suddenly looks suspicious. Someone posts a long note or essay and before you consider what it says, you wonder if they even wrote it.

Every creative act now comes with a second job: authenticating the creative act.

Writers are starting to post AI detector scores like purity seals: 100% Human Written. It’s an anticipatory defense, a subtle flex, or both. We’ve flipped from default trust to default mistrust and from default confidence to fear. I find it quietly sad.

And yet, I’ve indulged too. I’ve run others’ writing and my own through the tests. I’ve felt the satisfaction of validating something bad as AI, and I’ve been shocked by words I spilled out in a fit of inspirational rage being marked suspicious. (I proceeded to seek out another detector for a second opinion like I was on trial.)

The ugly truth is that the moment you run your work through a detector you’ve given up something. You’re not asking if it’s good anymore but if it passes. And you’re implicitly saying that if it doesn’t, you’ll change it until it does.

The detector becomes the editor.

Goodhart’s law says that when a measure becomes a target, it ceases to be a good measure. Detectors can’t definitively distinguish between someone who used AI and someone who just hasn’t shed the human patterns that AI has learned to imitate. So over-trained styles are eliminated, and writers skew toward today’s ‘human-safe’ zones.

Of course not all slop is AI. Some is just human slop. But the detectors collapse a spectrum of quality (bad, derivative, formulaic, brilliant) into a binary: human or not. The moment one essay needs a seal, every essay without one becomes suspect.

II

The AI detector economy is obviously loving the AI acceleration. Selling shovels. Thematically, VCs are filing these tools under ‘cognitive security.’ Tools that offer protection from AI slop, from manipulation, and from bigger threats surely on the horizon.

Substack just added a slop button. And on Twitter, people now tag AI detection tools when they encounter sloppish posts. It’s a tech-assisted public trial on demand.

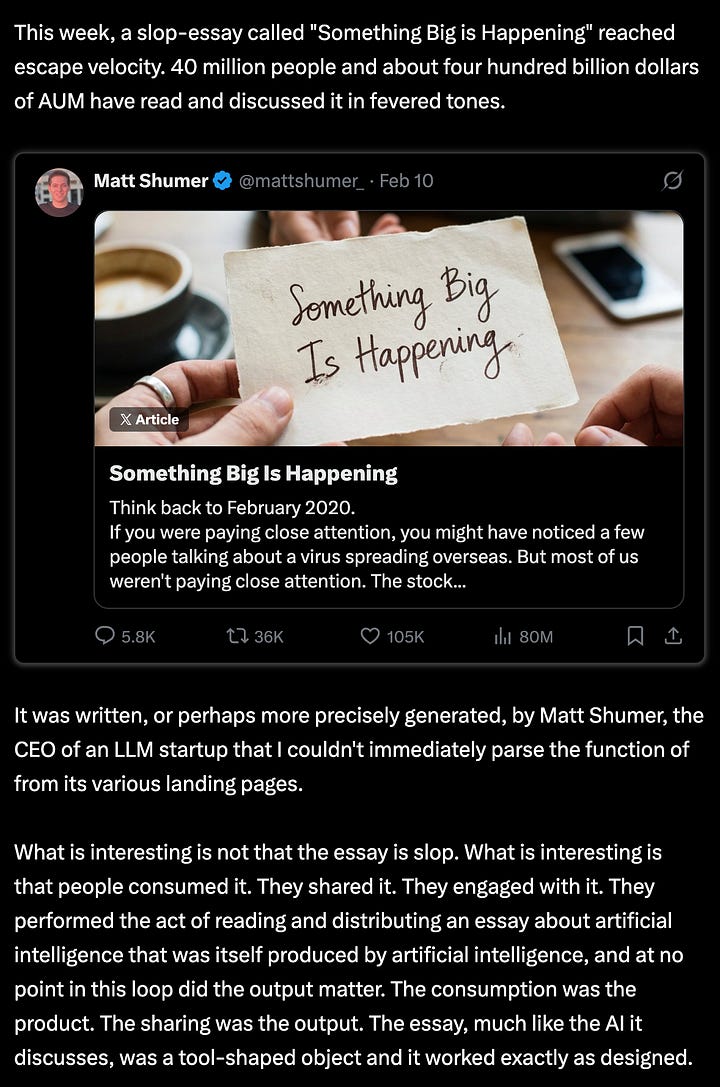

Twitter’s $1M Article contest opened a firehose of longform essays, and a good portion of them were suspicious. The now-infamous essay, Something Big Is Happening, hit 80 million views by analogizing AI to COVID. Its stated purpose was to educate normies, but the visible purpose was reach.

Will Manidis cited it as a kind of Tool-Shaped Object: AI slop that served its purpose by existing. It gave people something to talk about on a topic they’re primed to believe is timely and important, without anyone having to think too hard. “The consumption was the product. The sharing was the output.” Yet the most prominent AI detector on Twitter scored the essay 100% human written.

Then Trung Phan called out Aakash Gupta for posting dozens of longposts a day on trending topics. Nikita called it “very good slop” (yet the account is under review for automation). Gupta insists he writes them by hand.

The public AI detection phase had already started on Substack. Every few months a fast-growing writer triggers the neighborhood sleuths. Stepfanie Tyler’s essay on taste went mega-viral and the big reaction was to label it AI-generated. She leaned in with a follow-up essay, It’s My Party and I’ll Use AI If I Want To. Honestly, smart.

III

People get mad. It fascinates me to think about why. The underlying frustration isn’t “this is fake.” It’s more like “I gave you my attention and you didn’t earn it.” I spent my time reading this and you spent zero time writing it. The insult is the effort gap. Failing to tell me you used AI is just dishonesty added on top. Two things have changed with AI tools going mainstream: the effort to make things has gone down and our ability to gauge proof of work (effort legibility) has too.

You could argue none of this should matter. That quality is quality regardless of method. But that’s not how human attention works. Attention is a relationship, not a transaction.

And that suggests the bigger question goes beyond AI:

What do we owe each other when we ask for someone’s attention?

And how does the contract change when effort becomes invisible?

The plastic surgery analogy is helpful here. Nobody’s mad that someone looks good. They’re mad if they feel deceived. Maybe they compared themselves to someone who wasn’t “natural” or gave a compliment that wasn’t earned (whatever that has historically meant). But our culture has mostly gotten over this. Plastic surgery and the full spectrum of aesthetic treatments are far less secretive and far less taboo now. The one big difference: beauty is taken in passively, but reading asks the audience to participate and to buy in. More investment means more betrayal.

This is also why the discourse triangulates to detection instead of quality. Quality is more subjective and hence harder to weaponize. An AI verdict gives language to a frustration that’s really about something deeper.

IV

In the movie Inception, corporations hire teams to break into people’s dreams and steal their secrets. So the targets are forced to hire dream security — guards trained to detect and repel intruders. But secret extraction is a distraction from the real threat: inception — planting an idea so deep in a person’s subconscious that the person thinks it was theirs. The detectors plant something too: doubt.

Suspicion machines run on doubt and produce more of it.

We are all humanity detectors and we always have been. We’ve absorbed the culture of lip syncing, steroids, ghostwriters, and yes, plastic surgery. Fakery is cheap now. We’ve been burned enough that suspicion seems rational. And we’ve always loved catching fakes. The AI detector is the latest tool in a very old game.

What we’re really doing now is ‘othering’ AI to protect the value of human work. Because if we didn’t, we’d have to depend on merit alone and on humans to recognize it. Merit is a scary place to live. And we don’t trust our eyes now.

So we invent proxies for ‘real.’ We optimize for them. They work briefly, then become legible, then become solved games. Then we invent new tests. The cycle repeats, and faster each time, because new technology makes both the catching up and the counterfeiting progressively easier.

Every new proof of authenticity becomes the new minimum. The new floor eats the old ceiling. The pattern is always the same: the moment authenticity becomes legible, it becomes gameable. It’s Goodhart’s law for authenticity.

V

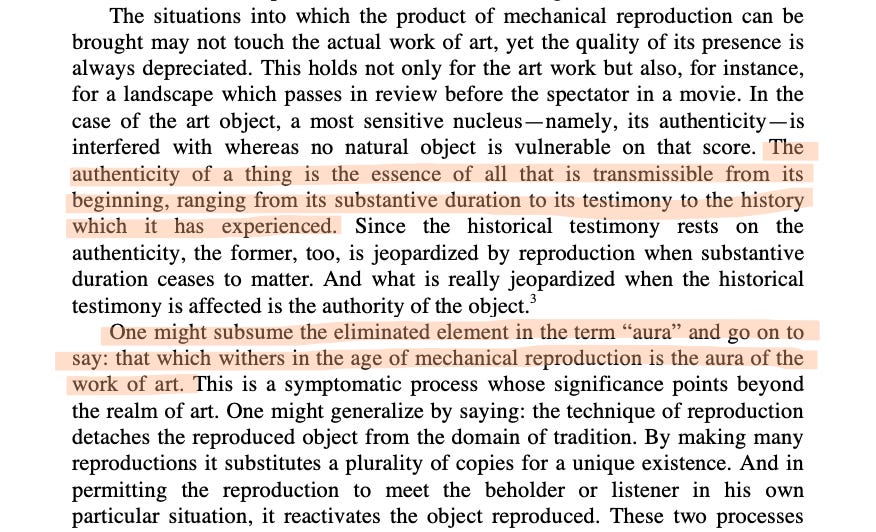

Walter Benjamin argued that mechanical reproduction erodes aura. We’ve moved past that. We’re now in the age of mechanically reproducing aura itself, so we’ve moved on to looking for the next rung on the ladder of proof of humanity.

Aura used to be embedded in the object. Now it has to be embedded in the person. The personalization of proof is the new frontier.

Effort was once legible (proof of work), but that’s fast eroding. Next comes proof of process or proof of craft. We’ve taken to performed transparency. Sharing off-duty looks and behind-the-scenes content. Founders building in public. If a tree falls in the forest and no one’s around to stream it, did it fall? So we livestream it. We kill all latency between in and out. We satisfy the suspicious.

But only for a while. Once transparency is legible, it too becomes a costume.

The infrastructure of proof erodes our capacity for belief. We forget what it felt like to consume without suspecting or create without bracing for suspicion. But performance isn't the enemy. We've always performed. The question is whether the performance serves something real or just simulates it.

If effort is diminished and can be hidden, if proof can be gamed or soon will be, and if transparency itself can be performed, what’s left?

The only thing left that can’t be gamed is wanting something badly enough that it shows. Desire is the closest thing to proof of authenticity we have left.

Desire resists gaming because it’s too high-dimensional to fake well. You can perform any single signal of it at any single moment. But the full pattern across time, contexts, and situations unseen is too complex to be manufactured.

Not borrowed desire or socially acceptable desire or safe ambition. Honest and visible desire. The kind that proves itself yours. Independent, embarrassing, raw. To want something visibly is to risk failing visibly. That’s why most people hedge. They want things privately and with plausible deniability built in. And beyond public desire, you need a why, a living story that takes shape in private desire.

When Timothée Chalamet says he's on a quest for greatness, you can argue it's sincere or strategic. It doesn't matter. You believe he wants it. There's no reason to fake that desire, we think, only to hide it. And like it or not, this is why people label Donald Trump authentic too. His whole personality is telling you what he desires and telling you why he deserves to get it. You can hate what he wants and hope he doesn't get it, but you cannot deny that he wants it.

Duration is the final filter. People chasing rewards leave when rewards dry up. Sustained obsession can’t be faked. If you do fake it long enough to become indistinguishable from someone who has it, you’re not faking anymore.

Just don’t show me a test that says you’re real. The only thing I know when you show me a 100% Human score is that you wanted me to know you were authentic. All you’ve proven is your desire to prove something. The most human trait of all.

In the end, authenticity is a lot like love. You can say the words. You can perform the gestures. But you can’t prove it. The proof is in our experience of the thing itself — or it’s nowhere. The moment we can’t recognize humanity without a machine to verify it, we’ve already lost ours.
